File Collectors

Overview

DataBlend supports a variety of collectors that receive files from SFTP locations, whether local or remote. Excel (.xlsx), delimited (e.g., CSV), flat, and JSON files can be uploaded directly to a DataBlend stream. How the data is processed depends on the schema type and the upload settings.

For more information about streams and their relationship to schemas and data sources, see Data Sources, Schemas, and Streams.

How to upload a file

To begin a file upload, browse to the schema that you wish to update.

A file can only be uploaded to an open stream from the stream details page.

Select a Stream

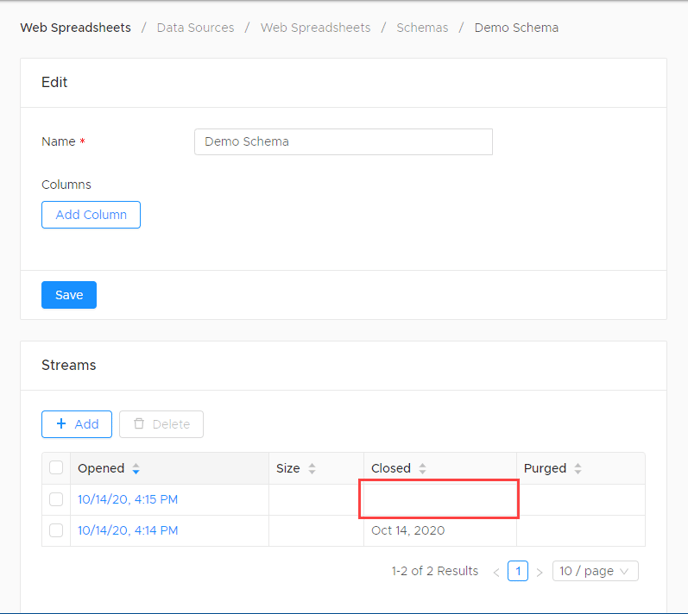

Look at the stream section for any open streams. (An open stream will not have a Closed date.)

If there is an existing open stream, decide whether to upload to it or to create a new one.

| If Schema Type Is… | … Then Upload to Existing Open Stream Will… |
|---|---|
| Default | Add contents of file to existing contents of stream. |
| Realtime | Add contents of file to existing contents of stream. |
| Single-file | Overwrite existing contents of stream with contents of the file. |
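The behavior in the table can be pictured with a minimal Python sketch. It is an illustration only, not DataBlend's implementation: the function name `upload_to_open_stream` and the representation of a stream as a list of rows are assumptions made for this example.

```python
def upload_to_open_stream(schema_type, stream_rows, file_rows):
    """Return the stream contents after uploading file_rows to an open stream.

    Hypothetical helper: a stream is modeled as a plain list of rows.
    """
    if schema_type in ("Default", "Realtime"):
        # File contents are added to what the stream already holds.
        return list(stream_rows) + list(file_rows)
    if schema_type == "Single-file":
        # File contents replace what the stream already holds.
        return list(file_rows)
    raise ValueError(f"Unknown schema type: {schema_type}")


existing = [{"id": 1}, {"id": 2}]
incoming = [{"id": 3}]

print(upload_to_open_stream("Default", existing, incoming))
# [{'id': 1}, {'id': 2}, {'id': 3}]
print(upload_to_open_stream("Single-file", existing, incoming))
# [{'id': 3}]
```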

If you do not wish to add the contents of the file to an existing stream, then close the open stream and create a new one.

If there is not an existing open stream, create a new one.

Upload a File

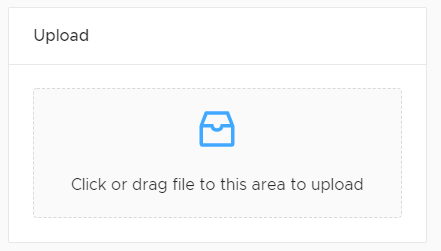

A stream which can be written to will have a file upload box.

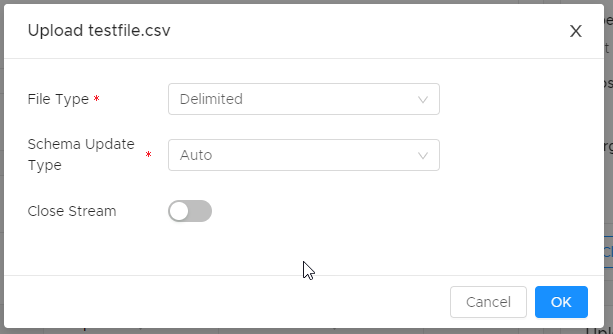

Drop the file on the upload box (or use the file selector to find it) and the upload settings box will open.

| Option | Description |
|---|---|
| File Type | DataBlend will identify the file type where possible, but this setting can be changed if necessary. |
| Schema Update Type | Add New Columns (formerly Add Only): Existing schema columns are preserved regardless of whether they exist in the first record collected. New columns identified in the first record collected are added to the schema. If the collection returns no records, the existing schema is unchanged. Recreate Columns (formerly Auto): Default. Schema is recreated from the first record collected. If the collection returns no records, the existing schema is unchanged. Preserve Columns (formerly Manual): No changes are made to the schema during collection. If the schema is changed by the file upload, data in previous streams may no longer be available. (Columns may “go missing”.) When in doubt, set to Add New Columns. |
|  | Leave set to false if: Set to true if: |
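The three Schema Update Type settings can be compared side by side with a short Python sketch. It only mirrors the rules described in the table; the function name `update_schema` and the modeling of a schema as a list of column names are assumptions for illustration, not DataBlend's API.

```python
def update_schema(existing_columns, first_record, update_type="Recreate Columns"):
    """Return the schema columns after a collection (hypothetical model).

    first_record is a dict for the first record collected, or None when the
    collection returned no records.
    """
    if update_type not in ("Add New Columns", "Recreate Columns", "Preserve Columns"):
        raise ValueError(f"Unknown schema update type: {update_type}")
    if first_record is None or update_type == "Preserve Columns":
        # No records collected, or no schema changes allowed: schema is unchanged.
        return list(existing_columns)
    record_columns = list(first_record.keys())
    if update_type == "Recreate Columns":
        # Schema is rebuilt from the first record only.
        return record_columns
    # Add New Columns: keep every existing column, append unseen ones.
    new_columns = [c for c in record_columns if c not in existing_columns]
    return list(existing_columns) + new_columns


schema = ["id", "name"]
record = {"id": 1, "email": "a@example.com"}

print(update_schema(schema, record, "Add New Columns"))   # ['id', 'name', 'email']
print(update_schema(schema, record, "Recreate Columns"))  # ['id', 'email']
print(update_schema(schema, record, "Preserve Columns"))  # ['id', 'name']
```

In this sketch, Recreate Columns drops `name`, which is the "columns may go missing" case the table warns about.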

Archive

Collected files can be archived. If archiving is not wanted, select None from the drop-down menu. Copy copies the file to the Archive Path. Move moves the file to a new path. Delete deletes the file upon collection.

Note that when Copy or Move is selected as the Archive Option, the Archive Path is required.
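As a rough local analogy, the four archive options behave like the following Python sketch. It operates on the local filesystem rather than SFTP, and the function name `archive_file` and its parameters are hypothetical. It also assumes that Move places the file under the Archive Path, which is inferred from the requirement above rather than stated outright.

```python
import shutil
import tempfile
from pathlib import Path


def archive_file(source, option="None", archive_path=None):
    """Apply an archive option to a collected file (hypothetical helper).

    Returns the archived file's path, the untouched source for "None",
    or None when the file was deleted.
    """
    source = Path(source)
    if option not in ("None", "Copy", "Move", "Delete"):
        raise ValueError(f"Unknown archive option: {option}")
    if option in ("Copy", "Move") and not archive_path:
        # Mirrors the rule that Archive Path is required for Copy and Move.
        raise ValueError("Archive Path is required for Copy or Move")
    if option == "None":
        return source
    if option == "Delete":
        source.unlink()
        return None
    target_dir = Path(archive_path)
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / source.name
    if option == "Copy":
        shutil.copy2(source, target)            # original stays in place
    else:
        shutil.move(str(source), str(target))   # original is relocated
    return target


# Usage with a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    collected = Path(tmp) / "report.csv"
    collected.write_text("id,amount\n1,10\n")
    archived = archive_file(collected, "Copy", Path(tmp) / "archive")
    print(archived.name, collected.exists())
```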

Bookmarks

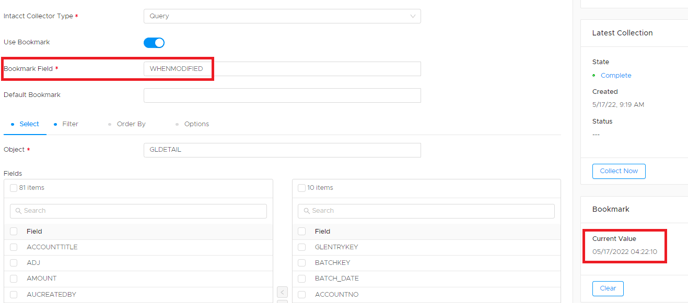

Bookmarks can be used on certain collectors to set a dynamic value each time the collector runs. A field from the collector source must be selected as the Bookmark Field. For example, ‘WHENMODIFIED’ is a field in the Sage Intacct GL Detail object that can be used as a Bookmark.

Once the Bookmark Field is set, every collection saves the value based on the latest run time. In the example above, the Bookmark is stored as a 'WHENMODIFIED' date of '05/17/2022 04:22:10'. The next time the Collector runs, it will pull only records with a different value, and will then update the Current Value field dynamically.
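The bookmark mechanism can be sketched in Python as an incremental filter. This is a simplified model, not DataBlend's implementation. It assumes that a "different value" means a value later than the stored Current Value, and that the new Current Value is the latest bookmark value among the records collected; the function name `collect_with_bookmark` and the date format constant are also assumptions.

```python
from datetime import datetime

DATE_FORMAT = "%m/%d/%Y %H:%M:%S"  # assumed format, matching the example value


def parse(value):
    return datetime.strptime(value, DATE_FORMAT)


def collect_with_bookmark(records, bookmark_field, current_value):
    """Return (collected_records, new_current_value) for one collector run."""
    if current_value is None:
        # No bookmark stored yet: everything is collected.
        collected = list(records)
    else:
        # Assumption: only records later than the stored value are pulled.
        collected = [
            r for r in records
            if parse(r[bookmark_field]) > parse(current_value)
        ]
    if collected:
        # Assumption: the bookmark advances to the latest value just collected.
        current_value = max((r[bookmark_field] for r in collected), key=parse)
    return collected, current_value


source = [
    {"RECORDNO": 1, "WHENMODIFIED": "05/17/2022 04:22:10"},
    {"RECORDNO": 2, "WHENMODIFIED": "05/18/2022 09:00:00"},
]
rows, value = collect_with_bookmark(source, "WHENMODIFIED", "05/17/2022 04:22:10")
print(rows)   # only RECORDNO 2
print(value)  # 05/18/2022 09:00:00
```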

Other Notes about Bookmarks:

- Although most commonly set to date fields, the Bookmark Field can also be a non-date field.
- Filters on the collector still apply. Even when a Bookmark is set, the collector only collects data that satisfies both the Bookmark value and the filters.
- Data is collected into a new stream even when Bookmarks are present; it does not automatically consolidate into one stream. Consolidation must be done in a query. To accomplish this consolidation, the FROM section of the query needs to leverage ‘All Streams’. This carries a risk: if the bookmark’s ‘Current Value’ is cleared or the bookmark is removed, the same data can be collected into a new stream and therefore overstate the dataset used in the query. Be mindful of which collectors and queries rely on the bookmark functionality, and avoid modifying the bookmark while it is in use. To reset the bookmarked data, clear the ‘Current Value’ and also purge all streams or create a new Schema so that you start from an empty dataset.
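The overstatement risk described in the notes above can be reproduced with a small Python simulation. It is a toy model under stated assumptions: streams are lists of rows, querying ‘All Streams’ is modeled as concatenating them, and the helper names `collect` and `all_streams` are hypothetical.

```python
def collect(source_rows, bookmark):
    """One collector run: return (rows for the new stream, updated bookmark)."""
    new_rows = [r for r in source_rows if bookmark is None or r["modified"] > bookmark]
    new_bookmark = max((r["modified"] for r in new_rows), default=bookmark)
    return new_rows, new_bookmark


def all_streams(streams):
    """Model a query over 'All Streams' as the concatenation of every stream."""
    return [row for stream in streams for row in stream]


source = [{"id": 1, "modified": 1}, {"id": 2, "modified": 2}]
streams = []

# Run 1: no bookmark yet, both rows land in the first stream.
rows, bookmark = collect(source, None)
streams.append(rows)

# Run 2: one new source row, only that row lands in the second stream.
source.append({"id": 3, "modified": 3})
rows, bookmark = collect(source, bookmark)
streams.append(rows)
print(len(all_streams(streams)))  # 3

# The Current Value is cleared without purging streams:
# every row is collected again and the consolidated dataset is overstated.
rows, bookmark = collect(source, None)
streams.append(rows)
print(len(all_streams(streams)))  # 6
```

Purging the existing streams before the third run would bring the consolidated count back to 3, which is why the reset procedure pairs clearing the ‘Current Value’ with a purge or a new Schema.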

Details


The Details section records who created and last updated the Collector, and when. This makes it easy to track multiple Collectors.

Latest Collection


The Latest Collection section shows the state of the collector, its created time, and the status of the query. States include complete and error.

Collections


The Collections section documents when the Collector was created, started, completed and the total amount of data scanned. The status includes information regarding the state of the Collector. This allows for easy tracking of multiple collections.

Upload History

File uploads will be run as jobs in the background. The number of records processed or any error encountered will be displayed in the Uploads history table.